A Bad Day – QNAP NAS Device RAID Volume Suddenly Gone

I’m going to talk about a bad day. It could have been a very, very bad day, but it wasn’t.

Around 3am, one of our main storage devices restarted and when it booted - BAM “Pool 1 RAID Group 1 is inactive.” Excuse me? The drives are in RAID 10 and hadn’t reported any faults until this moment. Granted, this particular model of QNAP device, the QNAP TS-879U-RP, has caused us significant heartache in the past.

This time, the primary storage volume had completely disappeared and the group of drives it was on was now “inactive”. We had backups that would completely cover us, but we had an outage just a month ago, so I didn’t want this storage unavailable for 4-6 hours while we restored from backups.

What do you do when nothing else can go wrong? Try things even though you have no idea what you’re doing!

My main resources were:

- http://qnapsupport.net/how-to-fix-not-active-raid-after-4-1-0-firmware/

- https://itcp.freshdesk.com/support/solutions/articles/4000031084-qnap-raid-system-errors-how-to-fix

By ssh-ing into the device, I could access the terminal and run a variety of commands that aren’t available in the lovely, bloated, broken, frustrating web interface.

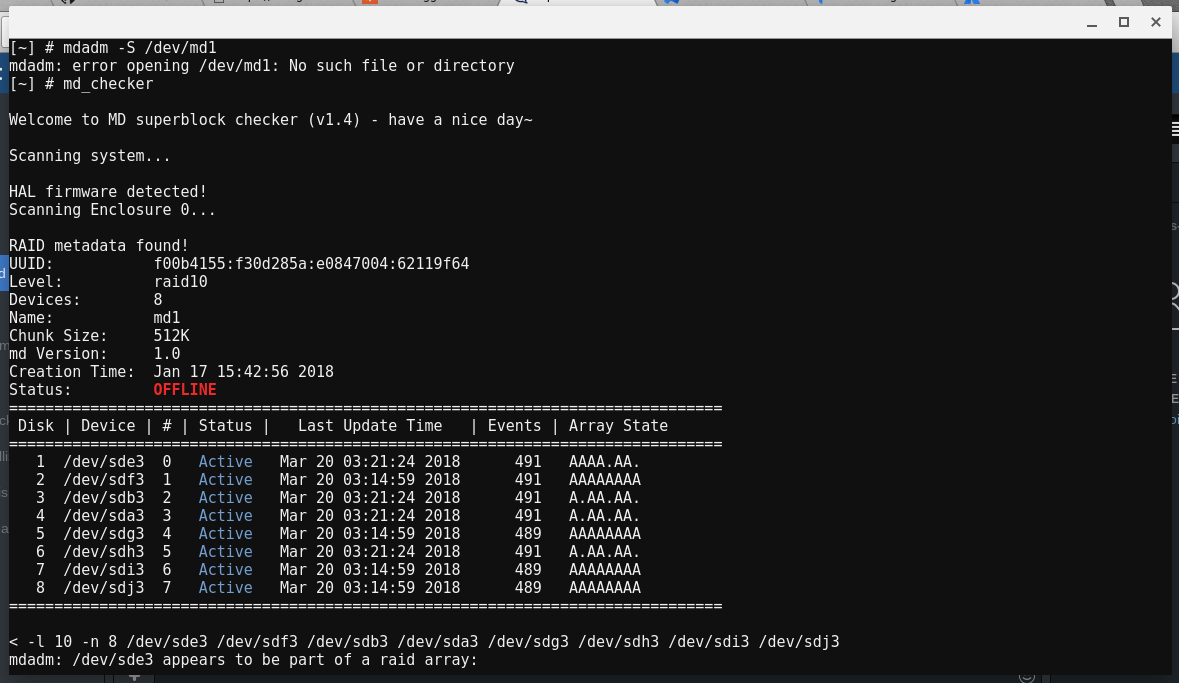

md_checker

md_checker gave me an idea of what the device saw from the drives, which was not good: 3 drives were reported as missing.

md_checker results

Recovering the Drives

I knew that all the drives were just fine, so I wanted to try to put Humpty Dumpty back together.

mdadm -AfR /dev/md1 /dev/sde3 /dev/sdf3 /dev/sdb3 /dev/sda3 /dev/sdg3 /dev/sdh3 /dev/sdi3 /dev/sdj3

mdadm -AfR is the gentler, safer way to put the array back together, but it didn’t work in our scenario. It was still good for getting familiar with the argument order: the md device first, then the member partitions in the order seen in md_checker.

/dev/md1: (the volume label from md_checker)

/dev/sde3: (first hard drive listed in the array and so forth)
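For readability, here is a minimal sketch of the same assemble attempt written with mdadm's long option names. The `assemble_cmd` function and the `DRY_RUN` variable are hypothetical helpers for illustration, not part of the QNAP tooling. By default it only prints the command so it can be reviewed first.

```shell
#!/bin/sh
# Sketch only: assemble_cmd and DRY_RUN are illustrative names (assumptions).
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the command, anything else = run it

assemble_cmd() {
    md_dev="$1"; shift
    # --assemble (-A): put an existing array back together from its members
    # --force    (-f): assemble even if some superblocks look out of date
    # --run      (-R): start the array even if not all members are present
    set -- mdadm --assemble --force --run "$md_dev" "$@"
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Example with the device order from this post:
# assemble_cmd /dev/md1 /dev/sde3 /dev/sdf3 /dev/sdb3 /dev/sda3 \
#              /dev/sdg3 /dev/sdh3 /dev/sdi3 /dev/sdj3
```

Setting `DRY_RUN=0` would run the command for real. The md device and partition names will differ from one system to the next.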

mdadm -CfR --assume-clean /dev/md1 -l 10 -n 8 /dev/sde3 /dev/sdf3 /dev/sdb3 /dev/sda3 /dev/sdg3 /dev/sdh3 /dev/sdi3 /dev/sdj3

It was time to tuck my head between my knees, take a deep breath and accept that things weren’t going to go together easily, but I still had a shot. This command re-created the array in place (good thing I had the drives in the right order in the command), but the volume remained offline.

-l 10: (RAID 10)

-n 8: (8 drives)

--assume-clean: (Ignore what we think about the drives, treat them as already in sync, and just go with it)
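The create command is the risky one, because it writes new RAID metadata onto the drives. Device order, RAID level and drive count all have to match the original array. As a hedged sketch, the hypothetical helper below (`create_cmd` is my own name, not a QNAP or mdadm tool) only prints the long-option form of the command and derives the drive count from the partitions listed, so the two can't drift apart. It assumes the original array used mdadm's defaults for everything not shown here, which may not be true on every device.

```shell
#!/bin/sh
# Sketch only: create_cmd is an illustrative helper (assumption).
# It prints the command and never runs it.
create_cmd() {
    md_dev="$1"; level="$2"; shift 2
    # --create (-C):       write new array metadata onto the listed members
    # --force (-f), --run (-R): same flags as in the -CfR form above
    # --assume-clean:      treat members as already in sync, skip the initial resync
    # --level (-l):        RAID level, 10 in this post
    # --raid-devices (-n): number of members, taken here from the argument count
    echo "mdadm --create --force --run --assume-clean $md_dev" \
         "--level=$level --raid-devices=$# $*"
}

# Example with the device order from this post:
# create_cmd /dev/md1 10 /dev/sde3 /dev/sdf3 /dev/sdb3 /dev/sda3 \
#            /dev/sdg3 /dev/sdh3 /dev/sdi3 /dev/sdj3
```

Printing the command first gives you one last chance to compare the device order against md_checker before anything touches the disks.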

mount /dev/md1

I naively assumed that an old-fashioned mount command would bring the volume back to the QNAP device. Haha, so silly.

/etc/init.d/init_lvm.sh

Fortunately, there’s a script on the device to handle these moments of bringing the volume back online. I was able to take a couple deep breaths and enjoy the rest of my day without a major outage window.
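If you want a quick check from the shell that the array really is up, one option is to look at /proc/mdstat, where the Linux md driver lists each array and its state. The `md_is_active` function below is a hypothetical helper, and the second argument exists only so it can be pointed at a saved copy of the file.

```shell
#!/bin/sh
# Sketch only: md_is_active is an illustrative helper (assumption).
# Succeeds (exit 0) when the named md array is listed as active.
md_is_active() {
    name="$1"
    mdstat="${2:-/proc/mdstat}"
    # Lines in /proc/mdstat look like "md1 : active raid10 ..."
    grep -q "^${name} : active" "$mdstat"
}

# Example:
# md_is_active md1 && echo "md1 is active" || echo "md1 is NOT active"
```

This only says the RAID layer is running. Whether the volume on top of it is mounted is a separate question, which on this device was handled by the init_lvm.sh script.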