25 Days of Serverless – Day 3

Day 3’s challenge introduces webhooks – where a service/server broadcasts when something has happened by making an HTTP request to a specified URL. You provide the URL to the service and receive automatic updates. In the language of an Azure Logic App, a webhook is the selected trigger for the workflow.

In the case of this example, we are looking for notifications from GitHub about commits/pushes to a repository. The other portion of the scope of Day 3’s challenge is to take any png file(s) and store the file’s URL in a database. Because my database of choice is SQL Server/Azure SQL, I’m going to create a C# Azure Function to handle the incoming webhook and writing to a database.

Catch the whole first week of my efforts for 25 days of serverless here .

At the risk of being redundant, the Azure function will be deployed to Azure through a service listening to for a GitHub webhook on the code repo to trigger build and deployment.

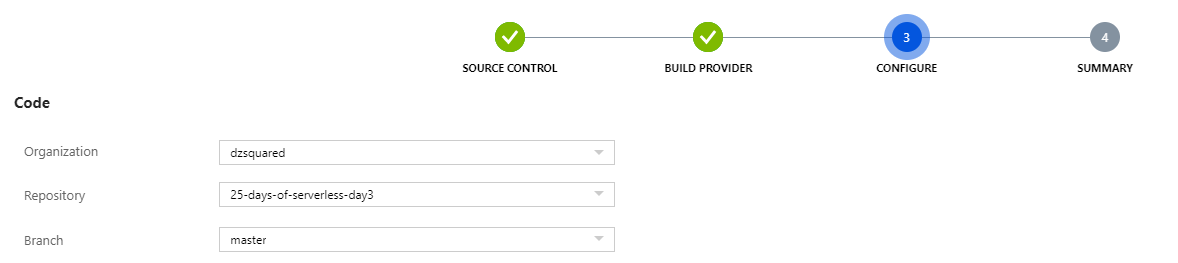

Create a new Azure Function in the Azure Portal – then go to Platform features. Find All settings under General Settings. From the now open App Service window, select Deployment Center from the lefthand side. Select GitHub (sign in as needed), then App Service build service – this will use the Kudu build engine to quickly build your code.

Building the Azure Function

The GitHub webhook is configured to send JSON – I’m not interested in playing with form-urlencoded data.

The template C# HTTP trigger function can fairly quickly be renovated into a function that will always return “success” but that crunches through the body of the POST request to look at each file added in the commit. The 2 nested foreach() loops navigate the JSON body and accommodate the potential of multiple commits being pushed at a time and multiple images being added at once.

[FunctionName("GithubWebhook")]

public static async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Function, "post", Route = null)] HttpRequest req,

ILogger log)

{

log.LogInformation("GitHub webhook received");

string requestBody = await new StreamReader(req.Body).ReadToEndAsync();

dynamic data = JsonConvert.DeserializeObject(requestBody);

foreach( dynamic commit in data.commits ) {

foreach( dynamic fileAdded in commit.added ) {

string fileName = (string)fileAdded;

log.LogInformation(fileName);

}

}

return new OkObjectResult("success");

}Get the File Names

Adding a reference to the Systems.Collections.Generic assembly with using System.Collections.Generic; we can create a list of strings before we iterate through the JSON body. We’re also going to split the fileName with the string.Split() function to check the file extension before we add it to the list.

[FunctionName("GithubWebhook")]

public static async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Function, "post", Route = null)] HttpRequest req,

ILogger log)

{

log.LogInformation("GitHub webhook received");

string requestBody = await new StreamReader(req.Body).ReadToEndAsync();

var fileList = new List<string>();

dynamic data = JsonConvert.DeserializeObject(requestBody);

foreach( dynamic commit in data.commits ) {

foreach( dynamic fileAdded in commit.added ) {

string fileName = (string)fileAdded;

log.LogInformation(fileName.Split('.')[fileName.Split('.').Length-1]);

if ( fileName.Split('.')[fileName.Split('.').Length-1] == "png" ) {

fileList.Add(fileName);

}

}

}Store the File Names in a Database

I already have a “junk drawer” Azure SQL database setup – so I will throw the connection string from the Azure portal into the local.settings.json file. The property name is important – I used db_images.

To write to SQL Server/Azure SQL in C#, I need to add 2 assemblies (System.Data.Common and System.Data.SqlClient). Add them with a “using” statement in the c# file as well as to the csproj file. After you save the csproj file changes, execute dotnet restore from the terminal.

<ItemGroup>

<PackageReference Include="Microsoft.NET.Sdk.Functions" Version="1.0.29" />

<PackageReference Include="system.data.common" Version="4.3.0" />

<PackageReference Include="system.data.sqlclient" Version="4.5.1" />

</ItemGroup>If I expected to frequently have >10 rows to insert in the database, I would write this code segment differently. I’m assuming I will have 1-5 rows to insert for each webhook call so the next segment of code (after the list is populated) will:

- Check the full repository URL

- Get the connection string from settings

- Connect to the SQL server

- For each item in the list, execute an insert statement to add the full URL to the image file to the database

string repoUrl = data.repository.html_url;

var db_images = Environment.GetEnvironmentVariable("db_images");

if (fileList.Count > 0) {

using (var connection = new SqlConnection(db_images)) {

string sql = @"INSERT INTO CHRISTMAS_PNG (PNG_PATH) SELECT @PNGPATH";

foreach( string pngpath in fileList) {

using (SqlCommand command = new SqlCommand(sql, connection))

{

connection.Open();

var parampngpath = new SqlParameter("PNGPATH", SqlDbType.VarChar);

parampngpath.Value = repoUrl+ "/raw/master/" + pngpath;

command.Parameters.Add(parampngpath);

var results = command.ExecuteNonQuery();

}

}

connection.Close();

}

}

return new OkObjectResult("success");

}Deploy!

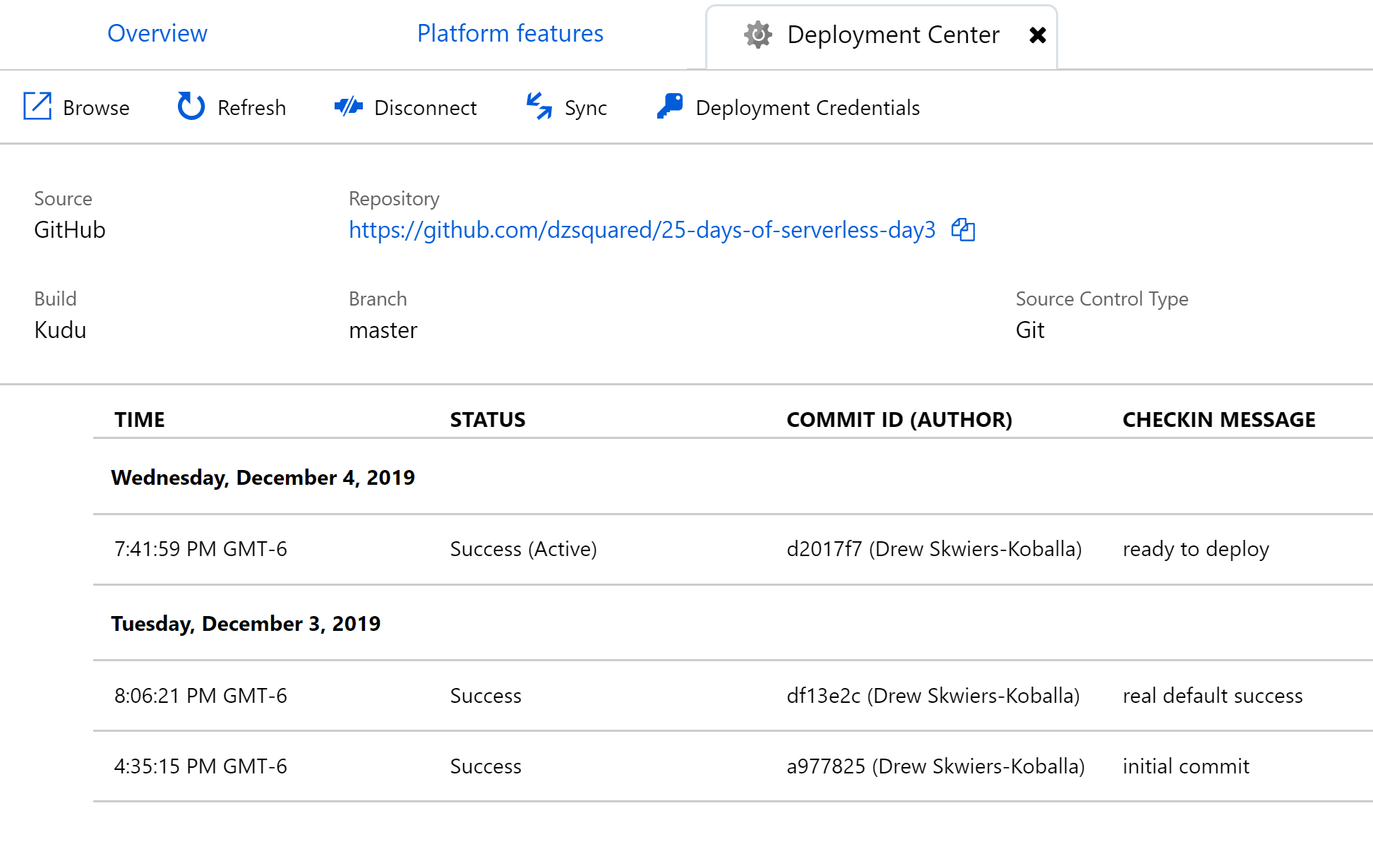

Due to the way we established our Azure Function – it deploys the code pushed to an GitHub repository – I can commit and push the code I’ve written (and tested) locally to GitHub and it will automatically deploy.

(and configure)

The local.settings.json file does not a) commit to Git b) push to GitHub c) deploy. This is good – since it contains our database user’s password. Before our Azure Function is complete, we need to add that same database connection string to the Application settings for the Azure function.